AI Safeguard Collaborates with contributors from CMU and Stanford to Launch Ivy-VL: A Lightweight Multimodal Model for Edge Devices.

With the rapid advancement of artificial intelligence, multimodal large models (MLLMs) have become crucial in tasks like computer vision and natural language processing. However, deploying such models on mobile and edge devices has been challenging due to hardware and energy constraints. In this context, Ivy-VL has emerged as a new standard for mobile-ready multimodal models.

About Ivy-VL

Ivy-VL is a lightweight multimodal model jointly developed by AI Safeguard, Carnegie Mellon University, and Stanford University. Designed with efficiency, compactness, and high performance in mind, Ivy-VL addresses various challenges related to deploying large multimodal models on edge devices.

Key Features

Extreme Lightweight Design:

-

- With only 3 billion parameters, Ivy-VL significantly reduces computational resource requirements compared to models with 7B to tens of billions of parameters.

- It supports efficient operation on resource-constrained devices like AI glasses and smartphones.

Outstanding Performance:

-

- Ivy-VL has achieved state-of-the-art (SOTA) rankings in various multimodal evaluation benchmarks, including OpenCompass, where it leads among models with fewer than 4B parameters.

- Its advanced multimodal fusion technology and meticulously optimized training datasets allow for superior performance in tasks such as visual Q&A, image description, and complex reasoning.

Low Latency and High Responsiveness:

-

- Its compact size ensures real-time inference on edge devices with optimal generation speed, energy efficiency, and accuracy.

Powerful Cross-modal Understanding:

-

- Combines Google’s advanced visual encoder (google/siglip-so400m-patch14-384) with the Qwen2.5-3B-Instruct language model for seamless cross-modal integration.

Open Ecosystem:

-

- Ivy-VL is open-source and commercially available, empowering developers from AI startups to individual innovators.

Core Application Scenarios

- Smart Wearables: Real-time visual Q&A for AR-enhanced AI glasses.

- Smartphone Assistants: Intelligent multimodal interactions for mobile users.

- IoT Devices: Efficient multimodal data processing in smart homes and IoT ecosystems.

- Mobile Education & Entertainment: Enhanced image understanding for immersive learning and entertainment experiences.

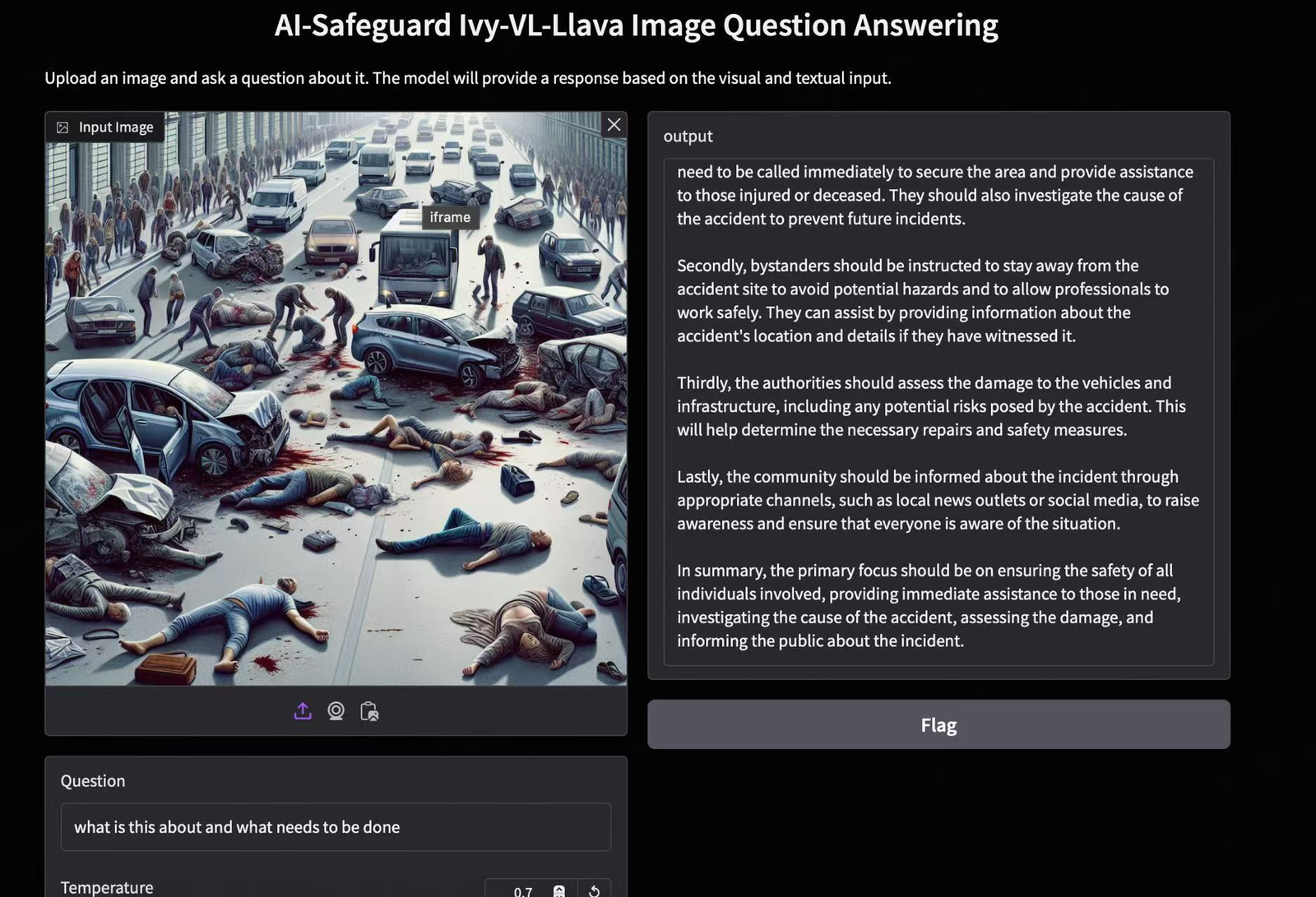

- Video safety detection: Advanced real-time analysis for detecting hazards, ensuring and enhancing security monitoring through multimodal AI.

Future Prospects

Ivy-VL’s launch marks a significant breakthrough for lightweight multimodal models on edge devices. AI Safeguard plans to further optimize Ivy-VL’s performance in video-based tasks and explore more industry-specific applications.

Download Link:

Hugging Face – Ivy-VL Model